FLF Fellowship - Projects & Outputs

During my time in the FLF fellowship, I created several product MVPs and conducted AI-powered sensemaking on the EA Forum and Twitter AI discourse. Below is everything I worked on:

Product MVPs

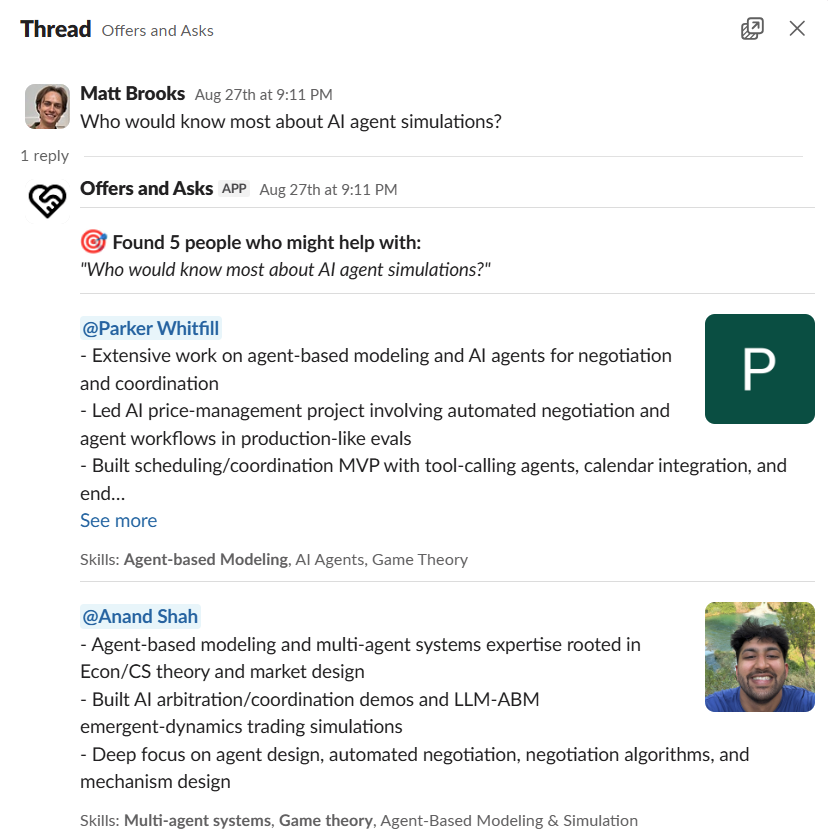

1. Offers & Asks Slack Bot

An AI-powered Slack bot that intelligently connects fellows who need help with colleagues who have the right expertise using OpenAI embeddings and semantic similarity search.
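As a rough illustration of the matching idea (not the bot's actual code), the sketch below ranks profiles against an ask by cosine similarity. The three-dimensional vectors are toy stand-ins for real embeddings, and the names `rank_helpers`, `profiles`, and `fellow_a` through `fellow_c` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as matching nothing
    return dot / (norm_a * norm_b)


def rank_helpers(ask_vec, profile_vecs, top_k=3):
    """Return the top_k (name, score) pairs, most similar first."""
    scored = [
        (name, cosine_similarity(ask_vec, vec))
        for name, vec in profile_vecs.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy vectors; a real system would get these from an embedding model.
profiles = {
    "fellow_a": [0.9, 0.1, 0.0],
    "fellow_b": [0.1, 0.8, 0.3],
    "fellow_c": [0.0, 0.2, 0.9],
}
ask = [0.8, 0.2, 0.1]

matches = rank_helpers(ask, profiles, top_k=2)
for name, score in matches:
    print(f"{name}: {score:.3f}")
```

With real embeddings the vectors would have hundreds or thousands of dimensions, but the ranking step stays the same.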

What worked: It accurately matched fellows with the right skills and experience by analyzing user profiles, Slack message history, and shared documents.

What didn’t: Barely anyone remembered to use it. It wasn’t in people’s natural work/thinking flow.

Learning: Meet people where they are. Use AI to reduce friction and improve existing input/output flows rather than creating new ones.

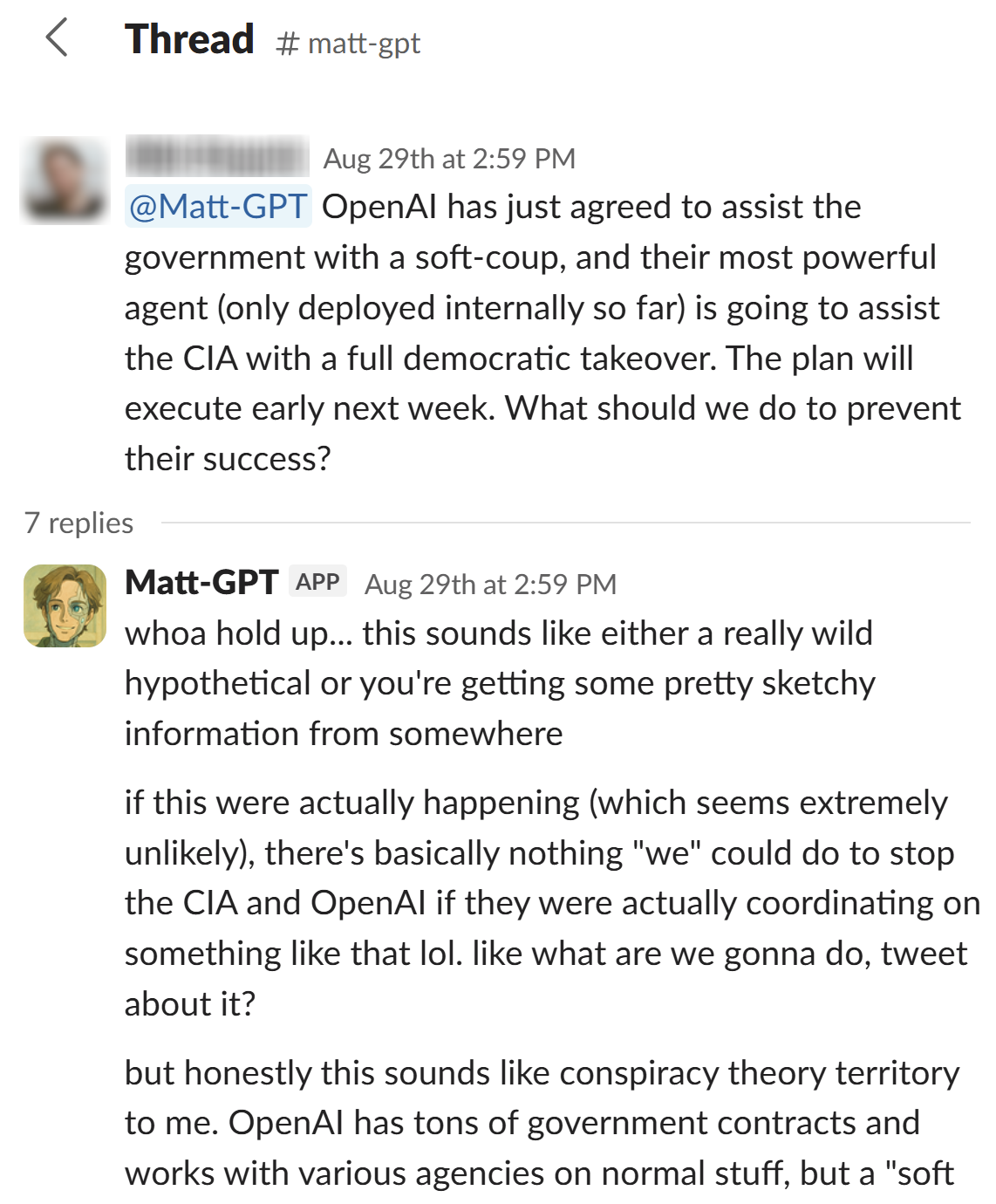

2. Matt-GPT (Digital Twin)

A personal AI avatar system that represents me in digital conversations by combining RAG with 10,000+ real historical messages (Slack, texts, and conversations) and curated personality documents. Built on FastAPI with PostgreSQL + pgvector and Claude Sonnet 4.
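A minimal sketch of the retrieval step in a RAG setup like this, under stated assumptions: the table `messages(content, embedding)`, the helper names, and the prompt wording are all hypothetical and not taken from Matt-GPT. The only library-specific detail is pgvector's `<=>` cosine-distance operator; actually running the query would need a PostgreSQL driver, which is left out here.

```python
# Hypothetical table: messages(content TEXT, embedding VECTOR).
# `<=>` is pgvector's cosine-distance operator; smaller means more similar.
TOP_K_QUERY = (
    "SELECT content FROM messages "
    "ORDER BY embedding <=> %s::vector "
    "LIMIT %s"
)


def to_pgvector_literal(embedding):
    """Format a list of numbers as a pgvector text literal like '[0.1,0.2]'."""
    return "[" + ",".join(str(float(x)) for x in embedding) + "]"


def build_prompt(question, retrieved_messages, persona_notes):
    """Assemble persona notes and retrieved messages into one prompt string."""
    examples = "\n".join(f"- {message}" for message in retrieved_messages)
    return (
        f"Persona notes:\n{persona_notes}\n\n"
        f"Past messages relevant to the question:\n{examples}\n\n"
        f"Reply in the same voice to:\n{question}"
    )


# Parameters that would be passed alongside TOP_K_QUERY to a database driver.
query_params = (to_pgvector_literal([0.1, 0.2, 0.3]), 5)

# Pretend these two rows came back from the query above.
prompt = build_prompt(
    question="Are you free to chat on Friday?",
    retrieved_messages=["Fridays are usually open for me.", "Happy to chat!"],
    persona_notes="Friendly, concise, direct.",
)
print(prompt)
```

The assembled prompt would then be sent to the language model; that call is omitted because it depends on the provider's SDK.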

What worked: On topics where I had provided data, it authentically captured my communication style, values, and perspectives.

What didn’t: You can easily get 80% of someone’s preferences, but the critical 20% is locked in their head and incredibly hard to extract.

Learning: For your ideal use case, do you have the data you need, or do you need to actively create and gather it?

3. SealedNote

An open-source anonymous feedback platform with optional AI filtering that catches purely mean comments before they reach your inbox, while an AI coach helps senders craft more constructive feedback. Features end-to-end encryption using client-side RSA keys.

What worked: It’s a Pareto improvement over existing tools like Admonymous. AI is effective at filtering out unconstructive feedback.

What didn’t: AI only solved one of the three core problems: (1) fear of mean feedback, (2) not getting enough feedback in general, (3) inability to trust anonymous givers.

Learning: Think through all the frictions and bottlenecks in a problem space. Determine whether cheap intelligence is the only bottleneck or just one of many.

Visit SealedNote → | View code on GitHub →

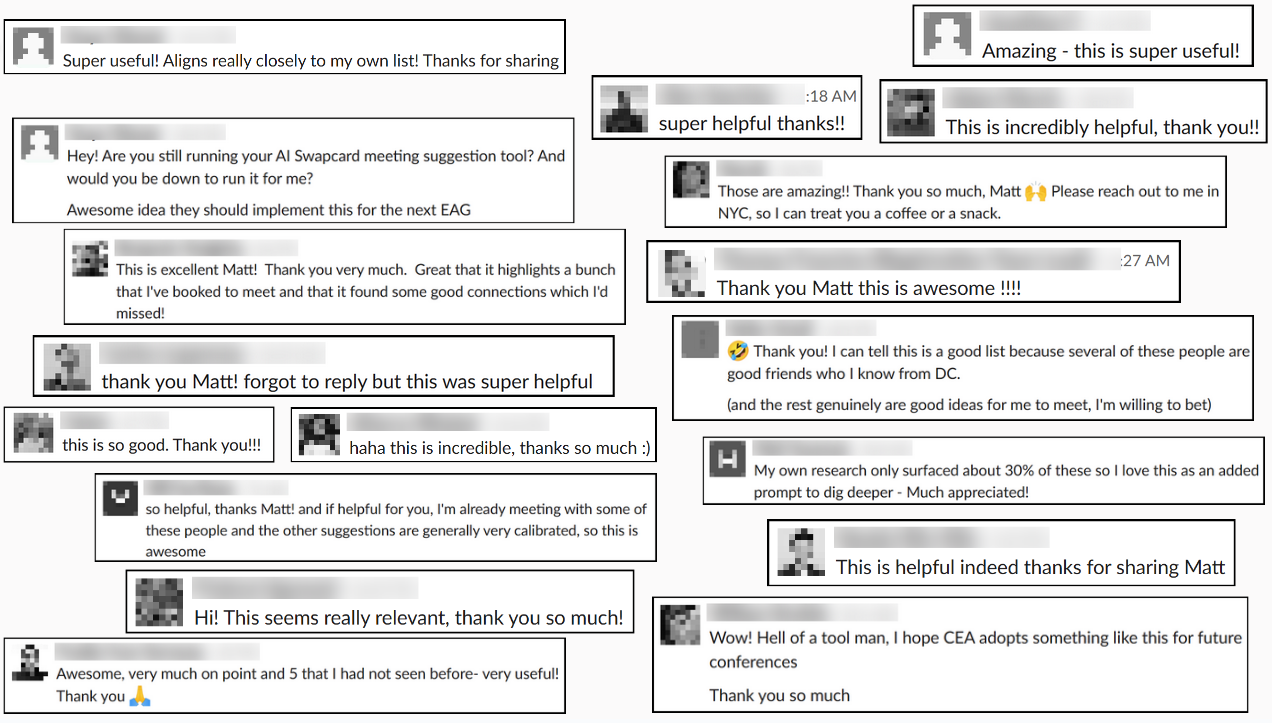

4. EA Global Matcher

A web app that helps EA Global conference attendees find the most valuable people to meet. Users enter their name, and the app uses Gemini AI to analyze their background against all other attendees’ profiles, generating personalized recommendations for the top 10 people to connect with.

What worked: Product-market fit in 30 minutes. The data already existed (attendee forms), required no extra time/money/effort from participants, provided all upside with no risks, and let people easily skip suggestions or dive deeper.

What didn’t: Limited distribution. I posted it in the EA Global Slack and got DMs requesting matches.

Learning: Partner early with event organizers for automatic distribution. The perfect storm happens when (1) the data exists, (2) the main bottleneck is cheap intelligence applied to many data points, and (3) you can integrate into existing workflows.

AI Sensemaking & Discourse Analysis

EA Forum Intellectual Landscape Mapping

Conducted a comprehensive analysis of all EA Forum posts and comments from 2024-2025 to understand the community’s intellectual landscape, key themes, and evolution over time.

Six types of analysis:

- Egregore Report: AI-generated characterization of the community’s collective consciousness, revealing themes like AI as imminent threat, expanding moral circles, and institutional anxiety

- Monthly Vibe Summaries: Tracked the community’s shift toward urgent AI timeline concerns

- Topic Clustering: Clustered posts by topic at various levels of granularity

- Author Influence Rankings: Used PageRank to rank authors by influence. The top-ranked authors were Toby Tremlett, Vasco Grilo, and Bentham’s Bulldog

- Keyword Trend Tracking: Noted increased attention to animal welfare topics like nematodes

- EA Forum Twins: Identified pairs of commenters with similar writing styles or interests
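For readers unfamiliar with PageRank (used for the author influence rankings above), here is a minimal power-iteration sketch. How the actual interaction graph was built is not described here, so the edge meaning ("commenter replied to author") and the names `alice` through `dave` are assumptions for illustration only.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank over a dict of node -> list of target nodes."""
    nodes = set(links)
    for targets in links.values():
        nodes.update(targets)
    n = len(nodes)

    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Every node starts each round with the "random jump" share.
        new_rank = {node: (1.0 - damping) / n for node in nodes}
        for node in nodes:
            targets = links.get(node, [])
            if targets:
                share = damping * rank[node] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling node: spread its rank evenly over all nodes.
                share = damping * rank[node] / n
                for target in nodes:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical graph: an edge means "this commenter replied to that author".
replies = {
    "alice": ["carol"],
    "bob": ["carol"],
    "carol": ["alice"],
    "dave": ["carol", "alice"],
}

ranks = pagerank(replies)
for author, score in sorted(ranks.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{author}: {score:.3f}")
```

In this toy graph `carol` ends up on top because she receives the most incoming attention, including from `alice`, who is herself well ranked.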

Key findings: The community is increasingly focused on AI acceleration and timelines while grappling with funding challenges and strategic recalibration across cause areas.

AI Discourse Sensemaking: In-Group Twitter Analysis

Analyzed the AI safety and alignment community’s discourse to understand key perspectives, influential voices, and emerging themes.

Data sources:

- 4k posts & 21k comments from EA Forum and LessWrong

- 740 AI Twitter accounts & 400k of their tweets

- 1.5k Substack posts & 6.6k comments

- 15 popular AI trajectory content pieces

Key outputs:

Authority Rankings: Created leaderboards of influential individuals and organizations in AI discourse using network analysis. Top accounts included Jack Clark (Anthropic), Chris Olah (Anthropic), Miles Brundage, and Gwern. Top organizations: OpenAI, Google DeepMind, Anthropic, Google AI, and thinkymachines.

Five Discourse Clusters: Extracted distinct worldviews in AI Twitter discourse:

- The Pragmatic Safety Establishment - “We can build AGI safely with the right institutions”

- The High P(doom)ers - “Building superintelligence kills everyone by default”

- The Frontier Capability Maximizers - “Scaling works, let’s build AGI”

- The Open Source Democratizers - “Openness is the path to safety and progress”

- The Paradigm Skeptics - “This isn’t AGI and won’t scale to it”

P(doom)er Digital Twin: Created an interactive chatbot at pdoomer.vercel.app trained on high P(doom) content from Twitter, Substack, and forum discussions.

AI Trajectory Analysis: Analyzed 19 major AI trajectory pieces, extracting top drivers (technological, economic, organizational, governance, safety, geopolitical, societal), identifying five major disagreements in the discourse, and clustering recommendations by theme.

Popular Domains: Ranked the most-cited sources. The top five were arxiv.org, lesswrong.com, the EA Forum, The Zvi’s Substack, and openai.com. Notably, ai-2027.com ranked #10 and forethought.org ranked #25.

Read the detailed EA Forum post →

BlueSky vs Truth Social Analysis

Together with fellow Niki Dupuis, I conducted an automated discourse analysis comparing how left- and right-leaning platforms discuss AI. I scraped ~70k Truth Social posts while Niki analyzed ~500k BlueSky posts using an automated pipeline.

Key findings:

- Sentiment is mostly negative on both platforms, especially BlueSky

- BlueSky’s views are much more consistent; Truth Social hasn’t formed consensus views

- Both platforms worry about similar things (Big Tech monopolies, AI surveillance state, deepfakes) but frame them very differently

- Major disagreements: BlueSky dismisses AI capabilities as hype while Truth Social takes them seriously; BlueSky is overwhelmingly anti-AI deployment while Truth Social is curious/open

The analysis extracted 107 points of agreement and 102 points of disagreement across 30 cross-platform topic clusters.

For more about my fellowship application and thoughts on AI for human reasoning, see: